
The 69th Annual IEEE International Electron Devices Meeting (IEDM) is set to start on 9 December, and the conference teaser shows that researchers have been extending the roadmap for a number of technologies, notably those used to make CPUs and GPUs.

Because chip companies can't keep increasing transistor density by scaling down chip features in two dimensions, they have moved into the third dimension by stacking chips on top of each other. Now they're working to build transistors on top of each other within those chips. Next, it appears likely, they'll squeeze still more into the third dimension by designing 3D circuits with 2D semiconductors, such as molybdenum disulfide. All of these technologies will likely serve machine learning, an application with an ever-growing appetite for processing power. But other research to be presented at IEDM shows that 3D silicon and 2D semiconductors aren't the only things that can keep neural networks humming.

3D Chip Stacking

Increasing the number of transistors you can squeeze into a given area by stacking up chips (called chiplets in this case) is both the present and future of silicon. Generally, manufacturers are striving to increase the density of the vertical connections between chips. But there are complications.

One is a change to the placement of a subset of chip interconnects. Beginning as soon as late 2024, chipmakers will start building power-delivery interconnects beneath the silicon, leaving data interconnects above. This scheme, called backside power delivery, has all sorts of consequences that chip companies are still working out. It looks like Intel will be talking about backside power's consequences for 3D devices [see below for more on those]. And imec will examine the consequences for a design philosophy for 3D chips called system technology cooptimization (STCO). (That's the idea that future processors will be broken up into their basic functions, each function will be on its own chiplet, those chiplets will each be made with the best technology for the job, and then the chiplets will be reassembled into a single system using 3D stacking and other advanced packaging tech.) Meanwhile, TSMC will address a long-standing worry in 3D chip stacking: how to get heat out of the combined chip.

[See the May 2022 issue of IEEE Spectrum for more on 3D chip stacking technologies, and the September 2021 issue for background on backside power.]

Complementary FETs and 3D Circuits

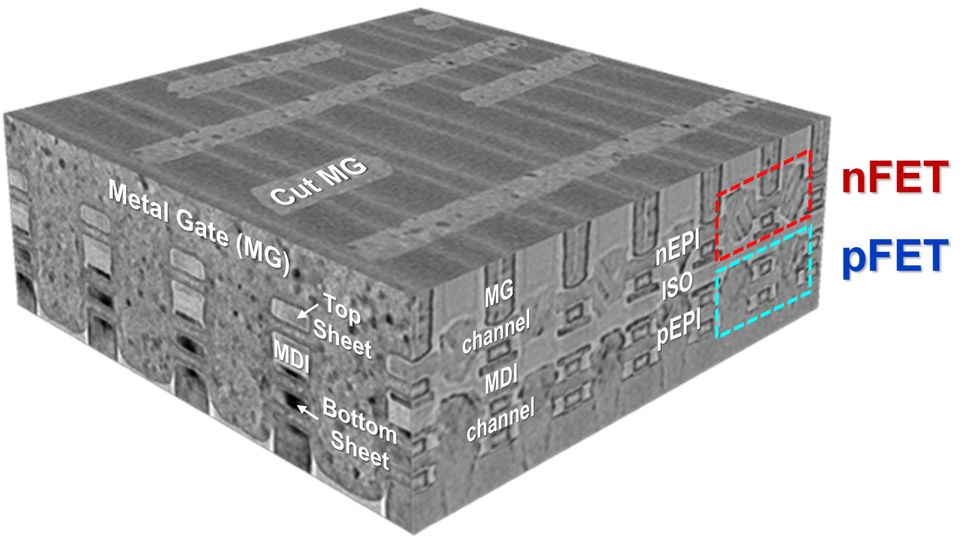

TSMC is detailing a complementary FET (CFET) that stacks an nFET on top of a pFET.

TSMC

With leading manufacturers of advanced chips moving to some form of nanosheet (or gate-all-around) transistor, research has intensified on the device that will follow: the monolithic complementary field-effect transistor, or CFET. This device, as Intel engineers explained in the December 2022 issue of IEEE Spectrum, builds the two flavors of transistor needed for CMOS logic (NMOS and PMOS) on top of each other in a single, integrated process.

At IEDM, TSMC will showcase its efforts toward CFETs. The company claims improvements in yield, which is the fraction of working devices on a 300-mm silicon wafer, and in scaling down the combined device to more practical sizes than previously demonstrated.

Meanwhile, Intel researchers will detail an inverter circuit built from a single CFET. Such circuits could potentially be half the size of their ordinary CMOS cousins. Intel will also explain a new scheme to produce CFETs that have different numbers of nanosheets in their NMOS and PMOS portions.

2D Transistors

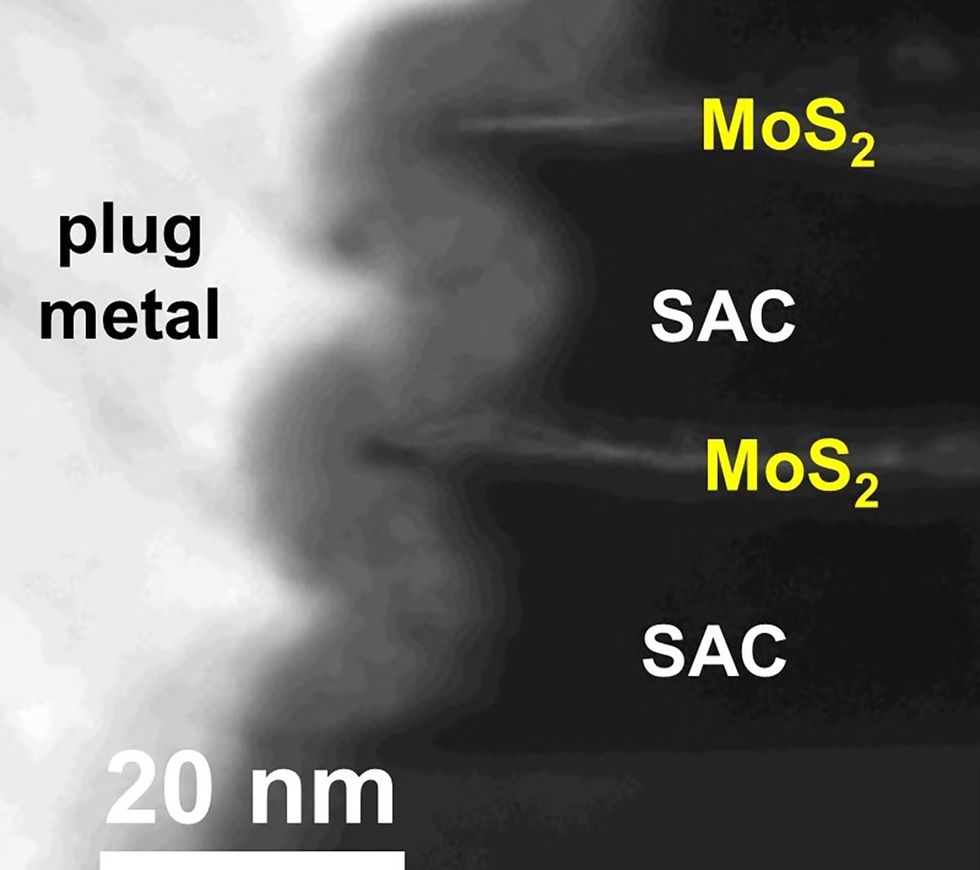

Metal contacts mold around the edge of a 2D semiconductor (MoS2) to create a lower-resistance connection.

TSMC

Scaling down nanosheet transistors (and CFETs, too) will mean ever-thinner ribbons of silicon at the heart of transistors. Eventually, there won't be enough atoms of silicon to do the job. So researchers are turning to materials that are semiconductors even in a layer that's only one atom thick.

Three problems have dogged the idea that 2D semiconductors could take over from silicon. One is that it's been very difficult to produce (or transfer) a defect-free layer of 2D semiconductor. The second is that the resistance between the transistor contacts and the 2D semiconductor has been far too high. And finally, for CMOS you need a semiconductor that can conduct both holes and electrons equally well, but no single 2D semiconductor seems to be good for both. Research to be presented at IEDM addresses all three in one form or another.

TSMC will present research into stacking one ribbon of 2D semiconductor atop another to create the equivalent of a 2D-enabled nanosheet transistor. The performance of the device is unprecedented in 2D research, the researchers say, and one key to the result was a new, wrap-around shape for the contacts, which lowered resistance.

TSMC and its collaborators will also present research that manages to produce 2D CMOS. It's done by growing molybdenum disulfide and tungsten diselenide on separate wafers and then transferring chip-size cutouts of each semiconductor to form the two types of transistors.

Memory Solutions for Machine Learning

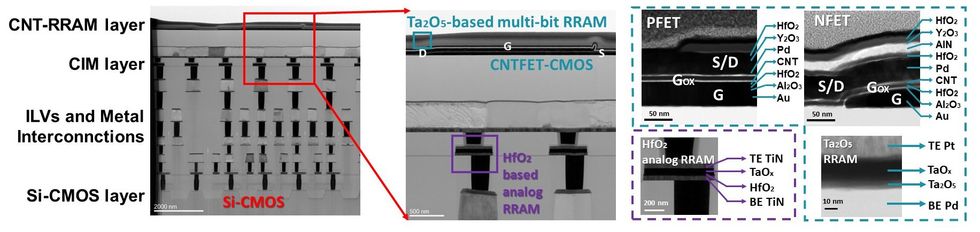

Researchers in China built a machine learning chip that integrates layers of carbon nanotube transistors, silicon, and memory (RRAM).

Tsinghua University/Peking University

Among the biggest issues in machine learning is the movement of data. The key data involved are the so-called weights and activations that define the strength of the connections between artificial neurons in one layer and the information that those neurons will pass to the next layer. Top GPUs and other AI accelerators tackle this problem by keeping data as close as they can to the processing elements. Researchers have been working on multiple ways to do this, such as moving some of the computing into the memory itself and stacking memory elements on top of computing logic.

Two cutting-edge examples caught my eye from the IEDM agenda. The first is the use of analog AI for transformer-based language models (ChatGPT and the like). In that scheme, the weights are encoded as conductance values in a resistive memory element (RRAM). The RRAM is an integral part of an analog circuit that performs the key machine learning calculation, multiply and accumulate. That computation is done in analog as a simple summation of currents, potentially saving huge amounts of power.

IBM's Geoff Burr explained analog AI in depth in the December 2021 issue of IEEE Spectrum. At IEDM, he'll be presenting a design for ways analog AI can tackle transformer models.
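To make the "summation of currents" idea concrete, here is a minimal Python sketch of an idealized resistive crossbar. It is not IBM's design: the function name `crossbar_mac` and the conductance and voltage values are hypothetical, and the model ignores noise, wire resistance, and device nonlinearity. Each cell contributes a current equal to its conductance times the input voltage (Ohm's law), and the currents sharing a column wire add up (Kirchhoff's current law), which is exactly a multiply and accumulate.

```python
# Illustrative sketch only: an idealized crossbar with no noise,
# wire resistance, or device nonlinearity.

def crossbar_mac(conductances, voltages):
    """Return the column currents (amperes) of an idealized crossbar.

    conductances[i][j] is the conductance in siemens of the cell that
    joins input row i to output column j (the stored weight).
    voltages[i] is the voltage applied to row i (the activation).
    """
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives each cell's current; Kirchhoff's current
            # law sums the cell currents that share a column wire.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical values chosen only for illustration.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]      # weights stored as conductances, in siemens
V = [0.5, 0.25]         # activations applied as voltages, in volts
column_currents = crossbar_mac(G, V)
```

In this toy model the whole matrix-vector product appears at once as currents on the column wires, rather than being computed one digital multiplication at a time, which is where the potential power savings come from.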

Another interesting AI scheme coming up at IEDM originates with researchers at Tsinghua University and Peking University. It's based on a three-layer system that includes a silicon CMOS logic layer, a carbon nanotube transistor and RRAM layer, and another layer of RRAM made from a different material. This combination, they say, solves a data transfer bottleneck in many schemes that seek to lower the power and latency of AI by building computing in memory. In tests it performed a standard image recognition task with accuracy similar to a GPU's but almost 50 times faster and with about 1/40th the energy.

What's particularly unusual is the 3D stacking of carbon nanotube transistors with RRAM. It's a technology the U.S. Defense Advanced Research Projects Agency spent millions of dollars developing into a commercial process at SkyWater Technology Foundry. Max Shulaker and his colleagues explained the plan for the tech in the July 2016 issue of IEEE Spectrum. His team built the first 16-bit programmable nanotube processor with the technology in 2019.
