This year, 2023, will probably be remembered as the year of generative AI. It is still an open question whether generative AI will change our lives for the better. One thing is certain, though: New artificial-intelligence tools are being unveiled rapidly, and that will continue for some time to come. And engineers have much to gain from experimenting with them and incorporating them into their design process.

That’s already happening in certain spheres. For Aston Martin’s DBR22 concept car, designers relied on AI that’s integrated into Divergent Technologies’ digital 3D software to optimize the shape and layout of the rear subframe components. The rear subframe has an organic, skeletal look, enabled by the AI exploration of forms. The actual components were produced through additive manufacturing. Aston Martin says that this method substantially reduced the weight of the components while maintaining their rigidity. The company plans to use this same design and manufacturing process in upcoming low-volume vehicle models.

NASA research engineer Ryan McClelland calls these 3D-printed components, which he designed using commercial AI software, “evolved structures.” Henry Dennis/NASA

Other examples of AI-aided design can be found in NASA’s space hardware, including planetary instruments, space telescopes, and the Mars Sample Return mission. NASA engineer Ryan McClelland says that the new AI-generated designs may “look somewhat alien and weird,” but they tolerate higher structural loads while weighing less than conventional components do. They also take a fraction of the time to design compared with traditional components. McClelland calls these new designs “evolved structures.” The phrase refers to how the AI software iterates through design mutations and converges on high-performing designs.
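The idea of iterating through design mutations and converging on high-performing designs can be illustrated with a toy evolutionary search. The sketch below is not the commercial software McClelland used. The beam model, the `score` function, and every numeric value are hypothetical, chosen only to show a mutate, evaluate, and select loop.

```python
import random

# Hypothetical toy problem: choose the width and height (in mm) of a
# rectangular beam cross-section. Bending stiffness scales with
# width * height**3 and mass scales with width * height.
MIN_STIFFNESS = 40_000.0  # assumed requirement, arbitrary units
BOUNDS = (2.0, 40.0)      # assumed allowable range for each dimension


def score(design):
    """Lower is better: mass plus a penalty if the beam is too flexible."""
    width, height = design
    mass = width * height
    stiffness = width * height ** 3 / 12.0
    shortfall = max(0.0, MIN_STIFFNESS - stiffness)
    return mass + 0.01 * shortfall


def mutate(design, rng, step=1.0):
    """Return a randomly perturbed copy of a design, clipped to BOUNDS."""
    low, high = BOUNDS
    return tuple(min(high, max(low, x + rng.gauss(0.0, step))) for x in design)


def evolve(start, generations=300, children=20, seed=0):
    """Keep the best of the parent and its mutated children each generation."""
    rng = random.Random(seed)
    best = start
    history = [score(best)]
    for _ in range(generations):
        candidates = [mutate(best, rng) for _ in range(children)]
        best = min(candidates + [best], key=score)
        history.append(score(best))
    return best, history


if __name__ == "__main__":
    best, history = evolve(start=(20.0, 20.0))
    print("start score:", round(history[0], 1))
    print("final score:", round(history[-1], 1))
    print("best design (width, height):", tuple(round(x, 1) for x in best))
```

Because the parent always competes with its children, the score can never get worse from one generation to the next. Real design tools work with far richer geometry and physics, but the loop has the same outline.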

In these kinds of engineering environments, co-designing with generative AI, high-quality, structured data, and well-studied parameters can clearly lead to more creative and more effective new designs. I decided to give it a try.

How generative AI can inspire engineering design

Last January, I began experimenting with generative AI as part of my work on cyber-physical systems. Such systems cover a wide range of applications, including smart homes and autonomous vehicles. They rely on the integration of physical and computational components, usually with feedback loops between the components. To develop a cyber-physical system, designers and engineers must work collaboratively and think creatively. It’s a time-consuming process, and I wondered if AI generators could help expand the range of design options, enable more efficient iteration cycles, or facilitate collaboration across different disciplines.

Aston Martin used AI software to design components for its DBR22 concept car. Aston Martin

When I began my experiments with generative AI, I wasn’t looking for nuts-and-bolts guidance on the design. Rather, I wanted inspiration. Initially, I tried text generators and music generators just for fun, but I eventually found image generators to be the best fit. An image generator is a type of machine-learning algorithm that can create images based on a set of input parameters, or prompts. I tested a number of platforms and worked to understand how to form good prompts (that is, the input text that generators use to produce images) with each platform. Among the platforms I tried were Craiyon, DALL-E 2, DALL-E Mini, Midjourney, NightCafé, and Stable Diffusion. I found the combination of Midjourney and Stable Diffusion to be the best for my purposes.

Midjourney uses a proprietary machine-learning model, whereas Stable Diffusion makes its source code available for free. Midjourney can be used only with an Internet connection and offers different subscription plans. You can download and run Stable Diffusion on your computer and use it for free, or you can pay a nominal fee to use it online. I use Stable Diffusion on my local machine and have a subscription to Midjourney.

In my first experiment with generative AI, I used the image generators to co-design a self-reliant jellyfish robot. We plan to build such a robot in my lab at Uppsala University, in Sweden. Our group specializes in cyber-physical systems inspired by nature. We envision the jellyfish robots collecting microplastics from the ocean and acting as part of the marine ecosystem.

In our lab, we typically design cyber-physical systems through an iterative process that includes brainstorming, sketching, computer modeling, simulation, prototype building, and testing. We start by meeting as a team to come up with initial concepts based on the system’s intended purpose and constraints. Then we create rough sketches and basic CAD models to visualize different options. The most promising designs are simulated to analyze dynamics and refine the mechanics. We then build simplified prototypes for evaluation before constructing more polished versions. Extensive testing allows us to improve the system’s physical features and control system. The process is collaborative but relies heavily on the designers’ past experiences.

I wanted to see if using the AI image generators could open up possibilities we had yet to imagine. I started by trying various prompts, from vague one-sentence descriptions to long, detailed explanations. At first, I didn’t know how to ask or even what to ask because I wasn’t familiar with the tool and its abilities. Understandably, these initial attempts were unsuccessful because the keywords I chose weren’t specific enough, and I didn’t give any information about the style, background, or detailed requirements.

In the author’s early attempts to generate an image of a jellyfish robot [image 1], she used this prompt:

underwater, self-reliant, mini robots, coral reef, ecosystem, hyper realistic.

The author got better results by refining her prompt. For image 2, she used the prompt:

jellyfish robot, plastic, white background.

Image 3 resulted from the prompt:

futuristic jellyfish robot, high detail, living under water, self-sufficient, fast, nature inspired. Didem Gürdür Broo/Midjourney

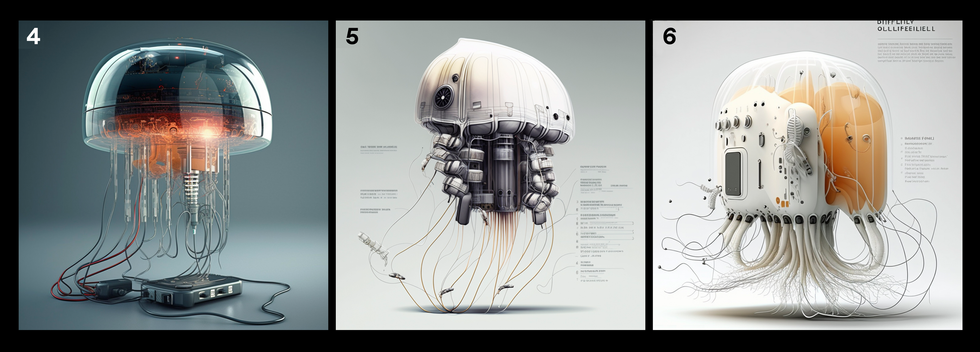

As the author added specific details to her prompts, she got images that aligned better with her vision of a jellyfish robot. Images 4, 5, and 6 all resulted from the prompt:

A futuristic electrical jellyfish robot designed to be self-sufficient and living under the sea, water or elastic glass-like material, shape shifter, technical design, perspective industrial design, copic style, cinematic high detail, ultra-detailed, moody grading, white background. Didem Gürdür Broo/Midjourney

As I tried more precise prompts, the designs started to look more in sync with my vision. I then played with different textures and materials, until I was happy with several of the designs.

It was exciting to see the results of my initial prompts in just a few minutes. But it took hours to make changes, reiterate the concepts, try new prompts, and combine the successful elements into a finished design.

Co-designing with AI was an illuminating experience. A prompt can cover many attributes, including the subject, medium, environment, color, and even mood. A good prompt, I learned, needed to be specific because I wanted the design to serve a particular purpose. On the other hand, I wanted to be surprised by the results. I discovered that I needed to strike a balance between what I knew and wanted, and what I didn’t know or couldn’t imagine but might want. I learned that anything that isn’t specified in the prompt can be randomly assigned to the image by the AI platform. And so if you want to be surprised about an attribute, then you can leave it unsaid. But if you want something specific to be included in the result, then you have to include it in the prompt, and you must be clear about any context or details that are important to you. You can also include instructions about the composition of the image, which helps a lot if you’re designing an engineering product.
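One way to keep track of which attributes are pinned down and which are left to the generator is to treat a prompt as a small structured record. The sketch below is only an illustration: the `PromptSpec` class and its field names are hypothetical, and the output is plain comma-separated text like the prompts quoted in this article, not the syntax of any particular platform.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class PromptSpec:
    """Hypothetical record of prompt attributes; None means 'surprise me'."""
    subject: str
    medium: Optional[str] = None
    environment: Optional[str] = None
    color: Optional[str] = None
    mood: Optional[str] = None
    composition: Optional[str] = None

    def to_prompt(self) -> str:
        """Join only the attributes that were specified, in field order."""
        values = (getattr(self, f.name) for f in fields(self))
        return ", ".join(v for v in values if v)

    def unspecified(self) -> list:
        """Names of the attributes left for the generator to decide."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


if __name__ == "__main__":
    loose = PromptSpec(subject="jellyfish robot")
    tight = PromptSpec(
        subject="futuristic jellyfish robot",
        medium="technical design",
        environment="living under the sea",
        mood="moody grading",
        composition="white background",
    )
    for spec in (loose, tight):
        print(spec.to_prompt())
        print("left to the generator:", spec.unspecified())
```

Listing the unspecified attributes makes the trade-off visible: each name in that list is something the generator is free to choose.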

It’s nearly impossible to control the outcome of generative AI

As part of my investigations, I tried to see how much I could control the co-creation process. Sometimes it worked, but most of the time it failed.

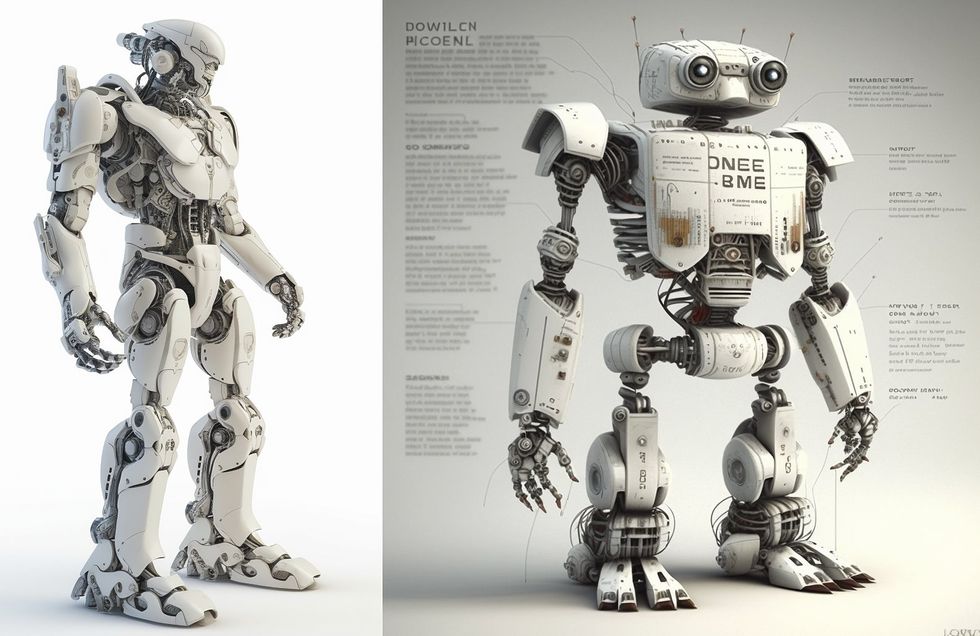

To generate an image of a humanoid robot [left], the author started with the simple prompt:

Humanoid robot, white background.

She then tried to incorporate cameras for eyes into the humanoid design [right], using this prompt:

Humanoid robot that has camera eyes, technical design, add text, full body perspective, strong arms, V-shaped body, cinematic high detail, light background. Didem Gürdür Broo/Midjourney

The text that appears on the humanoid robot design above isn’t actual words; it’s just letters and symbols that the image generator produced as part of the technical drawing aesthetic. When I prompted the AI for “technical design,” it frequently included this pseudo language, likely because the training data contained many examples of technical drawings and blueprints with similar-looking text. The letters are just visual elements that the algorithm associates with that style of technical illustration. So the AI is following patterns it recognized in the data, even though the text itself is nonsensical. This is an innocuous example of how these generators adopt quirks or biases from their training without any true understanding.

When I tried to change the jellyfish to an octopus, it failed miserably, which was surprising because, with apologies to any marine biologists reading this, to an engineer, a jellyfish and an octopus look quite similar. It’s a mystery why the generator produced good results for jellyfish but rigid, alien-like, and anatomically incorrect designs for octopuses. Again, I assume that this is related to the training datasets.

The author used this prompt to generate images of an octopus-like robot:

Futuristic electrical octopus robot, technical design, perspective industrial design, copic style, cinematic high detail, moody grading, white background.

The two bottom images were created several months after the top images and are slightly less crude looking but still don’t resemble an octopus.

Didem Gürdür Broo/Midjourney

After producing several promising jellyfish robot designs using AI image generators, I reviewed them with my team to determine if any aspects could inform the development of real prototypes. We discussed which aesthetic and functional elements might translate well into physical models. For example, the curved, umbrella-shaped tops in many images could inspire material selection for the robot’s protective outer casing. The flowing tentacles could provide design cues for implementing the flexible manipulators that would interact with the marine environment. Seeing the different materials and compositions in the AI-generated images and the abstract, artistic style encouraged us toward more whimsical and creative thinking about the robot’s overall form and locomotion.

While we ultimately decided not to copy any of the designs directly, the organic shapes in the AI art sparked useful ideation and further research and exploration. That’s an important outcome because, as any engineering designer knows, it’s tempting to start to implement things before you’ve done enough exploration. Even fanciful or impractical computer-generated concepts can benefit early-stage engineering design, by serving as rough prototypes, for instance.

Tim Brown, CEO of the design firm IDEO, has noted that such prototypes “slow us down to speed us up. By taking the time to prototype our ideas, we avoid costly mistakes such as becoming too complex too early and sticking with a weak idea for too long.”

Even an unsuccessful outcome from generative AI can be instructive

On another occasion, I used image generators to try to illustrate the complexity of communication in a smart city.

Normally, I would start to create such diagrams on a whiteboard and then use drawing software, such as Microsoft Visio, Adobe Illustrator, or Adobe Photoshop, to re-create the drawing. I might look for existing libraries that contain sketches of the components I want to include: vehicles, buildings, traffic cameras, city infrastructure, sensors, databases. Then I would add arrows to show potential connections and data flows between these elements. For example, in a smart-city illustration, the arrows could show how traffic cameras send real-time data to the cloud, which calculates parameters related to congestion before sending them to connected cars to optimize routing. Creating these diagrams requires carefully considering the different systems at play and the information that needs to be conveyed. It’s an intentional process focused on clear communication rather than one in which you can freely explore different visual styles.
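The connections in such a diagram amount to a directed graph, and writing them down as data is one way to make the intended information flow explicit before drawing anything. The following sketch is illustrative only: the node names and edge labels are assumptions based on the traffic example, and the output is text in Graphviz’s DOT language, which a separate tool would have to render.

```python
# Each edge is (source, target, label). The components and labels are
# assumed for illustration, following the traffic example in the text.
FLOWS = [
    ("traffic_camera", "cloud", "real-time data"),
    ("cloud", "connected_car", "congestion parameters"),
]


def to_dot(flows, name="smart_city"):
    """Render a list of labeled edges as a Graphviz DOT digraph string."""
    lines = [f"digraph {name} {{", "  rankdir=LR;"]
    for source, target, label in flows:
        lines.append(f'  {source} -> {target} [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


def downstream(flows, start):
    """Return every component that data from `start` can eventually reach."""
    reached, frontier = set(), [start]
    while frontier:
        node = frontier.pop()
        for source, target, _ in flows:
            if source == node and target not in reached:
                reached.add(target)
                frontier.append(target)
    return reached


if __name__ == "__main__":
    print(to_dot(FLOWS))
    print(sorted(downstream(FLOWS, "traffic_camera")))
```

A description like this states exactly which element talks to which, and that is the part of the diagram that has to be right.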

The author tried using image generators to show complex information flow in a smart city, based on this prompt:

Figure that shows the complexity of communication between different components on a smart city, white background, clean design. Didem Gürdür Broo/Midjourney

I found that using an AI image generator provided more creative freedom than the drawing software does but didn’t accurately depict the complex interconnections in a smart city. The results above represent many of the individual elements effectively, but they are unsuccessful in showing information flow and interaction. The image generator was unable to understand the context or represent connections.

After using image generators for several months and pushing them to their limits, I concluded that they can be useful for exploration, inspiration, and producing rapid illustrations to share with my colleagues in brainstorming sessions. Even when the images themselves weren’t realistic or feasible designs, they prompted us to imagine new directions we might not have otherwise considered. Even the images that didn’t accurately convey information flows still served a useful purpose in driving productive brainstorming.

I also learned that the process of co-creating with generative AI requires some perseverance and dedication. While it’s rewarding to obtain good results quickly, these tools become difficult to manage if you have a specific agenda and seek a specific outcome. Human users have little control over AI-generated iterations, and the results are unpredictable. Of course, you can continue to iterate in hopes that you’ll get a better result. But at present, it’s nearly impossible to control where the iterations will end up. I wouldn’t say that the co-creation process is purely led by humans, or not this human, at any rate.

I noticed how my own thinking, the way I communicate my ideas, and even my perspective on the results changed throughout the process. Many times, I began the design process with a particular feature in mind, for example, a specific background or material. After some iterations, I found myself instead choosing designs based on visual features and materials that I had not specified in my first prompts. In some instances, my specific prompts did not work; instead, I had to use parameters that increased the artistic freedom of the AI and decreased the importance of other specifications. So, the process not only allowed me to change the outcome of the design process, but it also allowed the AI to change the design and, perhaps, my thinking.

The image generators that I used have been updated many times since I began experimenting, and I’ve found that the newer versions have made the results more predictable. While predictability is a negative if your main purpose is to see unconventional design concepts, I can understand the need for more control when working with AI. I think in the future we will see tools that can perform quite predictably within well-defined constraints. More importantly, I expect to see image generators integrated with many engineering tools, and to see people using the data generated with these tools for training purposes.

Of course, the use of AI image generators raises serious ethical issues. They risk amplifying demographic and other biases in training data. Generated content can spread misinformation and violate privacy and intellectual property rights. There are many legitimate concerns about the impacts of AI generators on artists’ and writers’ livelihoods. Clearly, there is a need for transparency, oversight, and accountability regarding data sourcing, content generation, and downstream usage. I believe anyone who chooses to use generative AI must take such concerns seriously and use the generators ethically.

If we can ensure that generative AI is being used ethically, then I believe these tools have much to offer engineers. Co-creation with image generators can help us to explore the design of future systems. These tools can shift our mindsets and move us out of our comfort zones; it’s a way of creating a little bit of chaos before the rigors of engineering design impose order. By leveraging the power of AI, we engineers can start to think differently, see connections more clearly, consider future effects, and design innovative and sustainable solutions that can improve the lives of people around the world.
